Introduction to Python for Data Science¶

Python and Jupyter Notebooks¶

This is a short introductory training session on the use of Python for data science.

Python is a general-purpose programming language with a large collection of libraries for data manipulation, visualisation and statistical analysis. The language itself consists of a set of tokens, keywords and a grammar, which you combine with these libraries to explore and understand data from many different sources.

We focus on a common task in data science: import a data set, manipulate its structure, and then visualise the data. We shall use Python and a Jupyter Notebook to accomplish this task.

Jupyter is an environment for developing interactive notebooks that can be used to carry out data science tasks. It can be used with many different programming languages for a variety of tasks, but we shall focus specifically on using Python to do data science with Jupyter.

Much of what makes Python so useful comes from its vast collection of libraries. We shall use the Python pandas library to import and manipulate data, and the plotnine package for data visualisation.

This will be an interactive training session, so you should try to follow along with the tutorial.

Jupyter Notebooks¶

A Jupyter notebook consists of a set of input boxes in which one types Python code or text and a corresponding set of output boxes which show the output from each input. We shall create a new notebook for this training session in which you can copy and paste the tutorial material in order to get familiar with the environment.

On the right-hand side of the Jupyter Home Page tab in your browser, there is a New dropdown box. Click on it and select Python 3. This should open up a new tab in your browser containing an empty Jupyter notebook with an empty In [ ] prompt.

Click on the word Untitled next to the Jupyter logo at the top left of the pane. Type pythonintro into the name box and click on Rename. Then click on the floppy disk icon (Save) in the toolbar under the grey menu bar. This gives a name to the notebook (which should now appear to the right of the Jupyter logo) and saves the file into your project directory. If you go back to the Jupyter Home Page tab, you should see the new file.

Next you should download the training data and put it into your pythonintro directory.

- The data set – titanic.csv

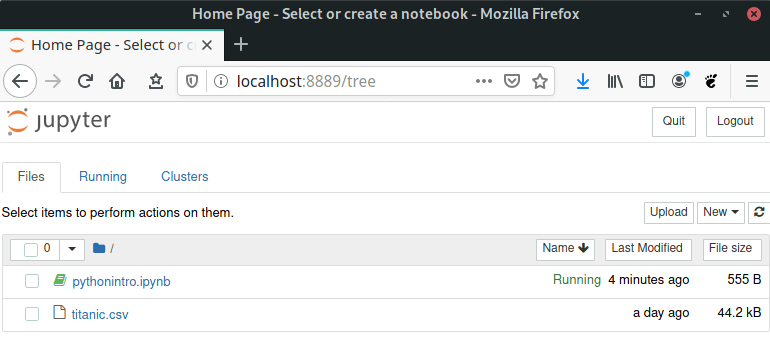

If you refresh your Jupyter Home Page in your browser then it should look like the screen shot below.

You should see the two files that you created.

Return to the blank notebook called pythonintro.ipynb by clicking on its browser tab. We shall use this blank notebook to keep your input separate from the training material and to help you get familiar with the Jupyter system.

If you wish to create an HTML copy of a notebook use the menu File > Download as and select HTML.

Quick tour of Jupyter and Python¶

A Jupyter notebook provides direct access to the Python interpreter. We can use the interpreter as a simple calculator or to interact with the current session to analyse and visualise data.

Type or paste each command shown below into an input box in your blank notebook. Press the Shift and Enter keys together after you have typed each command to send the input to the Python interpreter and see the output that the command generates (or press Run in the toolbar).

This is the basic mechanism for working with Python in a Jupyter notebook. You can change what you type in the box by selecting it with your mouse and adding to what is already written. Press Shift and Enter to re-evaluate the input. Feel free to experiment.

To start, paste in the following command and evaluate.

print('Hello World!')

This is the traditional way to start learning a new programming language. The print command in Python is a function that takes the string of characters 'Hello World!' and writes it to the output. You can find help on the print function by typing ?print into an input box and evaluating its content. The documentation appears in a separate box at the bottom of the browser pane.

We can use Python as a calculator by entering and evaluating expressions in an input box. The * below represents multiply, / is used to divide numbers. Type and evaluate

3 * 4 / 2
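As a small aside, the result of this expression is displayed as 6.0 rather than 6, because in Python 3 the / operator always produces a floating-point number. The sketch below contrasts it with the floor-division operator //, which is not used elsewhere in this tutorial and is shown only for comparison.

```python
# True division (/) always returns a float in Python 3.
result = 3 * 4 / 2
print(result)        # 6.0

# Floor division (//) keeps integers as integers.
floor_result = 3 * 4 // 2
print(floor_result)  # 6

# Operators of equal precedence are evaluated left to right,
# so 3 * 4 / 2 means (3 * 4) / 2.
grouped = (3 * 4) / 2
print(grouped == result)  # True
```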

If an expression becomes complicated then we can assign values to variables, such as x and y below, as a way of holding intermediate results. In this way we can build up complicated expressions step by step. You can see intermediate results using the print command. Paste in the following commands and evaluate:

x = 3

y = 1 + 2

print(y)

x * y

You can do a lot more with Jupyter than just type into cells and see output from Python. The Datacamp Python for data science cheat sheet is a concise starting point for using Python in a notebook for data science.

A tour of the Jupyter user interface can be found by selecting Help > User Interface Tour from the grey menu bar. There are other links available in the Help menu that are well worth going through. The Tutorial in the Python Reference material is a good introduction to the Python programming language.

Exercises¶

You can use your notebook to complete these exercises.

- Calculate the sum of 1 up to 10 by writing an expression and evaluating it in an input cell.

- Using section 5.3 of the Python reference tutorial define a tuple of 1 up to 10 and use the sum function in Python to calculate its sum. Check you get the same answer as the previous question.

- Find documentation on the range function in Python and use it to generate a sequence from 1 up to 10 and calculate its sum.

- Investigate the menu items and toolbar on your notebook. Find a way to convert a cell to markdown text. This is a way to document your work. It is how this notebook has been generated.

Loading data into Python¶

We shall use the titanic.csv file to illustrate how we can manipulate a pre-defined data set in Python.

To load the data into Python, we first import the pandas library of functions and use a shortcut called pd to access them. This ensures that functions in different libraries do not interfere with each other if you use them together.

Paste the contents of the following input box into your notebook and evaluate. You should see the output in the table below.

import pandas as pd

titanic = pd.read_csv("titanic.csv")

titanic

Here the function read_csv belongs to the pandas library, so we prefix it with the shortcut pd followed by a dot. It is a function that loads the CSV (comma-separated values) file into the current Python session in the form of a pandas table of data, which is called a DataFrame.

Each row in the data frame contains data on a single passenger on the Titanic. The output above shows the top 5 and bottom 5 rows in the file.
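Once a file has been loaded, a few quick checks help confirm that it was read as expected. The following is a minimal sketch that does not need titanic.csv: it reads a tiny made-up CSV held in memory, so the values and the variable names csv_text and sample are illustrative assumptions rather than part of the real data set.

```python
import io
import pandas as pd

# A tiny made-up CSV held in memory; it stands in for titanic.csv.
csv_text = """PassengerId,Pclass,Sex,Survived
1,3,male,0
2,1,female,1
3,3,female,1
4,1,female,1
5,3,male,0
"""

# read_csv accepts a file-like object as well as a file name.
sample = pd.read_csv(io.StringIO(csv_text))

print(sample.shape)          # (number of rows, number of columns)
print(list(sample.columns))  # column names taken from the header line
print(sample.head(3))        # the first three rows
print(sample.dtypes)         # the data type inferred for each column
```

The same shape, columns, head and dtypes calls can be tried on the titanic data frame in your notebook.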

Exercises¶

- Read the documentation for the pandas function read_csv.

- Study the DataCamp cheat sheet for pandas and try out some of the commands in your notebook.

- Create a copy of the titanic data set on the file system called titanic2.csv using the pandas function to_csv.

Manipulating data¶

The pandas library allows us to manipulate and perform calculations on a DataFrame. Often it is best to build up the manipulation of data in stages so that you can see the effect of each command.

We shall use a form of function piping, known as method chaining, to illustrate the flow of data as we manipulate it. To spread a chain like this over several lines in Python you must enclose the whole expression in brackets.

Type the following input into your notebook which calculates the overall survival rate for passengers in the data set.

(

titanic[['Survived']]

.agg('mean')

.mul(100)

)

The expression titanic[['Survived']] selects the single column Survived from the titanic data frame, which contains a 1 if the passenger survived and a 0 if they did not. The double square brackets mean that the result is still a data frame, with one column. The dot operator . calls a function on the result of the previous line, so each line takes the output of the line before it as its input. The agg function in the pandas library is used for aggregation. In this example, we calculate the mean (or average) of the Survived column, which for 0/1 values is the fraction of passengers who survived. The mul function multiplies each value by 100 so that we get the survival rate for passengers in percent.
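To see the effect of each stage, the chain can also be built up one step at a time. The sketch below uses a small made-up data frame called toy (an assumption for illustration, not the Titanic data) and checks that the staged version and the chained version agree.

```python
import pandas as pd

# Made-up values for illustration only.
toy = pd.DataFrame({'Survived': [0, 1, 1, 0], 'Pclass': [3, 1, 1, 3]})

# Double brackets give a one-column DataFrame, single brackets a Series.
as_frame = toy[['Survived']]
as_series = toy['Survived']
print(type(as_frame).__name__)   # DataFrame
print(type(as_series).__name__)  # Series

# Build the calculation one stage at a time.
step1 = toy[['Survived']]   # select the column
step2 = step1.agg('mean')   # fraction of 1s in the column
step3 = step2.mul(100)      # convert to percent

# The same calculation written as a single chain.
chained = (
    toy[['Survived']]
    .agg('mean')
    .mul(100)
)

print(step3.equals(chained))  # True
```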

The expression titanic[['Pclass', 'Survived']] selects two columns from the titanic data frame, and the next command groups the table according to passenger class held in column Pclass.

titanic_Pclass_rate = (

titanic[['Pclass', 'Survived']]

.groupby("Pclass")

.agg('mean')

.mul(100)

.rename(columns = {'Survived': 'Survival_Rate'})

.reset_index()

)

titanic_Pclass_rate

The sequence of functions is similar to that in the previous calculation. In addition, we rename the Survived column to Survival_Rate and use the reset_index function to turn the Pclass group labels back into an ordinary column, giving a simple pandas data frame with a default row index. The output is assigned to the new data frame called titanic_Pclass_rate.

We see the survival rate per ticket class in percent. Thus, the survival rate in first class on this data set was nearly 63%. Those in third class only had a 24% survival rate.
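The role of reset_index is easier to see by comparing the table before and after it is applied. This is a minimal sketch on a made-up data frame called toy, so the rates it prints are not those of the real data set.

```python
import pandas as pd

# Made-up values for illustration only.
toy = pd.DataFrame({
    'Pclass':   [1, 1, 2, 2, 3, 3, 3, 3],
    'Survived': [1, 1, 1, 0, 0, 0, 1, 0],
})

# After groupby and agg, the group labels form the row index.
grouped = (
    toy[['Pclass', 'Survived']]
    .groupby('Pclass')
    .agg('mean')
    .mul(100)
    .rename(columns={'Survived': 'Survival_Rate'})
)
print(grouped.index.name)     # Pclass
print(list(grouped.columns))  # ['Survival_Rate']

# reset_index moves Pclass back into an ordinary column.
flat = grouped.reset_index()
print(list(flat.columns))     # ['Pclass', 'Survival_Rate']
print(flat)
```

Having Pclass as an ordinary column is convenient later on, when columns are referred to by name for plotting.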

You can find more information on data manipulation using pandas on the DataCamp cheat sheet.

Exercises¶

- Find the survival rate for each sex.

- Find the mean and variance of the survival rate for each passenger class in a single data frame.

- Why are these results not necessarily the mean and variance of the survival rate on the Titanic?

Visualising data¶

Often we can gain additional insight from the data by using visualisation techniques. We shall use the Python package plotnine for this task.

The Python plotnine package is a re-implementation of the R ggplot2 package. The ggplot2 package rivals commercial plotting applications such as Tableau in its plotting capabilities. The package is a language for building plots based on the Grammar of Graphics. Unlike piping with function chaining, plotnine uses the + operator to build up a plot.

As a simple example, type the following into an input box and evaluate. This calls the plotnine function ggplot on the data titanic_Pclass_rate, but since we have not yet defined any mapping between the data and the plot, only a blank grey box is shown.

import plotnine as pn

pn.ggplot(titanic_Pclass_rate)

The plot is built up in stages. The function aes maps data columns in the titanic_Pclass_rate data frame to the plotnine chart. In the following input, we map the column Pclass to the x-axis and column Survival_Rate to the y-axis. Note that the scales are automatically calculated for you based on the data.

pn.ggplot(titanic_Pclass_rate, pn.aes('Pclass', 'Survival_Rate'))

For the next plot, we select the type of graphic as geom_col, which draws a bar chart. We first convert the Pclass column to a categorical variable so that each passenger class is treated as a separate category rather than as a number on a continuous scale.

titanic_Pclass_rate['Pclass'] = pd.Categorical(titanic_Pclass_rate['Pclass'])

(pn.ggplot(titanic_Pclass_rate, pn.aes('Pclass', 'Survival_Rate')) +

pn.geom_col()

)
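The conversion with pd.Categorical in the code above changes only how pandas stores and labels the column, not its values. The following sketch illustrates this on a made-up data frame called rates, which is an assumption for illustration.

```python
import pandas as pd

# Made-up values for illustration only.
rates = pd.DataFrame({
    'Pclass': [1, 2, 3],
    'Survival_Rate': [100.0, 50.0, 25.0],
})
print(rates['Pclass'].dtype)  # an integer type before conversion

# Convert the column to a categorical variable.
rates['Pclass'] = pd.Categorical(rates['Pclass'])
print(rates['Pclass'].dtype)                 # category
print(list(rates['Pclass'].cat.categories))  # [1, 2, 3]
```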

The resulting bar chart is a simple visualisation of the data in the data frame titanic_Pclass_rate. We can obtain a more sophisticated plot by splitting the bars based on the Sex column.

titanic_Sex_Pclass_rate = (

titanic[['Pclass', 'Sex', 'Survived']]

.groupby(['Pclass', 'Sex'])

.agg('mean')

.mul(100)

.rename(columns = {'Survived': 'Survival_Rate'})

.reset_index()

)

titanic_Sex_Pclass_rate['Pclass'] = pd.Categorical(titanic_Sex_Pclass_rate['Pclass'])

titanic_Sex_Pclass_rate['Sex'] = pd.Categorical(titanic_Sex_Pclass_rate['Sex'])

(pn.ggplot(titanic_Sex_Pclass_rate, pn.aes('Pclass', 'Survival_Rate', fill='Sex')) +

pn.geom_col(position='dodge')

)

Thus, we have visualised the survival rate for each sex split by passenger class. The argument position='dodge' of the geom_col function places the bars for each sex side by side instead of stacking them on top of each other.
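Grouping by two columns produces one row for each combination of passenger class and sex, which is the layout the dodged bar chart draws from. The sketch below shows that layout for a made-up data frame called toy, so the rates are illustrative only.

```python
import pandas as pd

# Made-up values for illustration only.
toy = pd.DataFrame({
    'Pclass':   [1, 1, 1, 3, 3, 3],
    'Sex':      ['female', 'male', 'male', 'female', 'female', 'male'],
    'Survived': [1, 1, 0, 1, 0, 0],
})

# One row per (Pclass, Sex) combination, with the rate in percent.
by_class_and_sex = (
    toy[['Pclass', 'Sex', 'Survived']]
    .groupby(['Pclass', 'Sex'])
    .agg('mean')
    .mul(100)
    .rename(columns={'Survived': 'Survival_Rate'})
    .reset_index()
)
print(by_class_and_sex)
```

Each row of the result corresponds to one bar in the plot: Pclass selects the position on the x-axis and Sex selects the fill colour.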

More information on the functionality available is on the plotnine home page.

You can see some more features of plotnine used in the plot below.

(pn.ggplot(titanic_Sex_Pclass_rate, pn.aes('Pclass', 'Survival_Rate', fill='Sex')) +

pn.geom_col(position='dodge') +

pn.xlab("Passenger Ticket Class") +

pn.ggtitle("Passenger Survival Rates on the Titanic") +

pn.theme(legend_position=(0.8, 0.75), legend_background=pn.element_rect(fill='whitesmoke')) +

pn.geom_text(pn.aes(label='Survival_Rate'), position=pn.position_dodge(width=0.9), size=8, va='bottom',

format_string='{0:2.0f}%')

)
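The format_string argument of geom_text in the plot above appears to follow Python's standard str.format syntax. Assuming that is the case, the sketch below shows in plain Python what the pattern '{0:2.0f}%' does to a number; the input values and the name label_format are made up for illustration.

```python
# The same pattern that is passed as format_string in the plot.
label_format = '{0:2.0f}%'

# .0f rounds to zero decimal places; the trailing % is a literal character.
print(label_format.format(62.96))  # 63%
print(label_format.format(24.24))  # 24%

# The 2 is a minimum width, so single-digit values are padded with a space.
print(repr(label_format.format(5.0)))  # ' 5%'
```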

Hopefully, this short introduction has given you an idea of the capabilities of Python for doing data science, and some pointers to find out more!

Exercises¶

- Save your notebook on the filesystem to keep a record of what you typed.

- Change the colours in the bars to suit your preferences.

- Produce a version of the plot above with horizontal bars. Why might this make a more compelling visual?