Last Updated on May 23, 2022

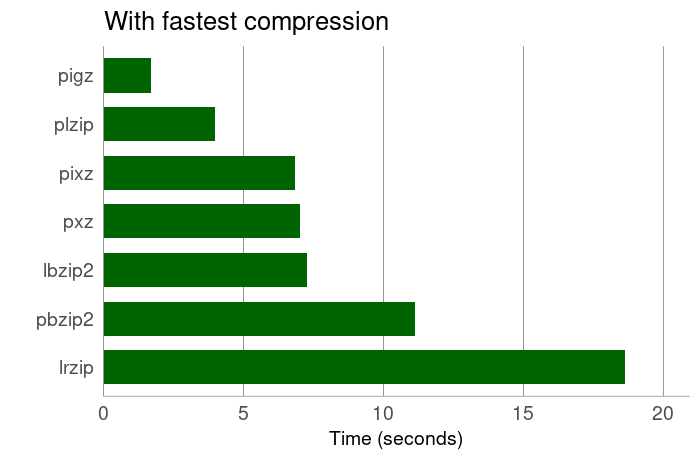

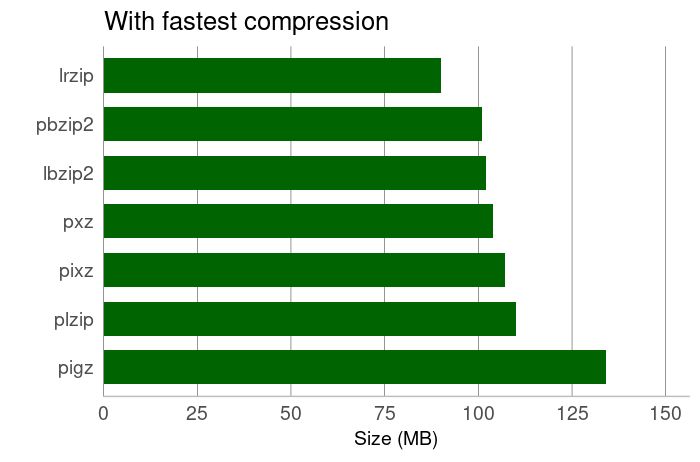

With Fastest Compression

Most of the tools provide a flag to set the compression level on a scale from 1 to 9; pxz, plzip and pixz use a scale from 0 to 9. This test uses the lowest (fastest) available compression level.
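As a rough, single-threaded analogue, Python's standard library exposes the same kind of level setting for the formats these tools produce. zlib (deflate, as used by gzip and pigz) accepts levels 0 to 9, where 1 is the fastest level that actually compresses and 0 stores data uncompressed. bz2 accepts 1 to 9, and lzma (xz) accepts presets 0 to 9. The sketch below compresses one buffer at each module's fastest setting; it only illustrates the level knobs and is not how any of the multi-core tools is implemented.

```python
import bz2
import lzma
import zlib

# Fastest compressing setting for each stdlib codec (illustrative mapping only):
#   zlib: level 1 (level 0 stores data without compressing)
#   bz2:  compresslevel 1 (valid range 1-9)
#   lzma: preset 0 (valid range 0-9)
FASTEST = {
    "deflate (gzip/pigz family)": lambda d: zlib.compress(d, 1),
    "bzip2 (pbzip2/lbzip2 family)": lambda d: bz2.compress(d, compresslevel=1),
    "xz/LZMA2 (pxz/pixz family)": lambda d: lzma.compress(d, preset=0),
}


def compress_fastest(data: bytes) -> dict[str, int]:
    """Return the compressed size of `data` for each codec at its fastest level."""
    return {name: len(fn(data)) for name, fn in FASTEST.items()}


if __name__ == "__main__":
    sample = b"multi-core compression benchmark " * 10_000
    for name, size in compress_fastest(sample).items():
        print(f"{name}: {len(sample)} -> {size} bytes")
```

Swapping in level 9 (or preset 9 for lzma) on the same buffer is an easy way to see the speed/size trade-off that the charts measure with the real tools.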

All of the multi-core tools made fairly light work of compressing the tarball with their fastest compression option.

If you need to compress large files on a low-powered multi-core machine, the fastest compression setting might be suitable. pigz compressed the 537MB tarball to 134MB in a whisker under 1.7 seconds. Most of the other tools shaved the tarball to around 100MB, and lrzip compressed it to a mere 90MB.
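The figures above can be turned into the usual comparison metrics: compression ratio (original size divided by compressed size), space saved, and throughput (input size divided by time). The helper names below are illustrative. Because pigz finished slightly under 1.7 seconds, dividing by 1.7 gives a lower bound on its throughput; no timing is quoted for lrzip, so only its ratio is computed.

```python
def ratio(original_mb: float, compressed_mb: float) -> float:
    """Compression ratio: how many times smaller the output is."""
    return original_mb / compressed_mb


def space_saving(original_mb: float, compressed_mb: float) -> float:
    """Fraction of the original size removed by compression."""
    return 1 - compressed_mb / original_mb


def throughput(original_mb: float, seconds: float) -> float:
    """Input processed per second, in MB/s."""
    return original_mb / seconds


if __name__ == "__main__":
    # Figures quoted in the text; 1.7 s is rounded up from "a whisker under".
    print(f"pigz:  ratio {ratio(537, 134):.2f}, saving {space_saving(537, 134):.0%}, "
          f"at least ~{throughput(537, 1.7):.0f} MB/s")
    print(f"lrzip: ratio {ratio(537, 90):.2f}, saving {space_saving(537, 90):.0%}")
```

For pigz this works out to a ratio of about 4.0 (roughly 75% saved) at no less than about 316 MB/s, while lrzip's 90MB output corresponds to a ratio of about 6.0 (roughly 83% saved).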

Next page: Page 4 – Charts with Best Compression

Pages in this article:

Page 1 – Introduction

Page 2 – Charts with Default Compression

Page 3 – Charts with Fastest Compression

Page 4 – Charts with Best Compression

Page 5 – lrzip with Different Compression Methods

Methodology used for the tests

We took a 537MB tarball of a popular source package. The tarball was copied to RAM (/dev/shm), and the tests ran in RAM on a quad-core CPU without hyper-threading (Core i5-2500K), with no X server running, and under negligible load.

Each test was run three times with the latest version (at the time of writing) of each multi-core compression tool. The average results are recorded in the charts above. The tests show the relative difference between the multi-core compression tools. They are for indicative purposes only.
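The measurement procedure described above (run each tool several times on data held in RAM and average the timings) can be sketched as a small harness. This is an illustrative assumption about how such timings might be collected, not the script used for these charts. The zlib workload is a stand-in, and the commented pigz invocation uses a hypothetical tarball path.

```python
import statistics
import time
import zlib
from typing import Callable


def average_runtime(task: Callable[[], object], runs: int = 3) -> float:
    """Run `task` `runs` times and return the mean wall-clock time in seconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)


if __name__ == "__main__":
    # Stand-in workload. To time a real tool, the task could instead be e.g.
    #   lambda: subprocess.run(["pigz", "-1", "-k", "-f", "/dev/shm/source.tar"], check=True)
    # where /dev/shm/source.tar is a hypothetical path to the tarball in RAM.
    payload = b"example source tarball contents " * 200_000
    mean_s = average_runtime(lambda: zlib.compress(payload, 1))
    print(f"mean of 3 runs: {mean_s:.3f} s")
```

Averaging over a few runs smooths out one-off effects such as scheduler noise; keeping the input in RAM, as the methodology does, removes disk I/O from the measurement.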

Learn more about the features offered by each multi-core compression tool. We’ve compiled a dedicated page for each tool explaining, in detail, the features it offers.

| Multi-Core Compression Tool | Description |
|---|---|
| Zstandard | Fast compression algorithm, providing high compression ratios |
| pigz | Parallel implementation of gzip; a fully functional replacement for gzip |
| pixz | Parallel, indexing XZ compression, fully compatible with XZ (LZMA and LZMA2) |
| PBZIP2 | Parallel implementation of the bzip2 block-sorting file compressor |
| lrzip | Compression utility that excels at compressing large files |
| lbzip2 | Parallel bzip2 compression utility, suited for serial and parallel processing |
| plzip | Massively parallel (multi-threaded) lossless data compressor based on lzlib |
| PXZ | Runs LZMA compression on multiple cores and processors |
| crabz | Like pigz, but written in Rust |


Thank you so much! I’m going to try out some of these.

A comparison between them would be very useful.

I did a similar study a few years ago and ended up using pbzip2 as my go-to compression utility.

The main reason is that it can do multi-core decompression as well, unlike pigz.

The compression algorithm is fairly slow, so it works best when you have 30+ cores to throw at it.

Keep in mind that to use pbzip2 to decompress with multiple cores, you need to compress with pbzip2 first. It adds some hints to the file to let the decompressor know how to split up the work properly.
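The comment above explains why only pbzip2-produced files decompress on several cores. A common way to make that possible is to compress the input as independent chunks, each a self-contained bzip2 stream, so that both compression and decompression can be split across workers. The Python sketch below illustrates that idea under that assumption; it does not reproduce pbzip2's actual file layout or how pbzip2 locates chunk boundaries in an existing file, and the chunk size is an arbitrary illustrative value. Threads are used for simplicity; CPython's bz2 module is expected to release the GIL while it (de)compresses, so chunks can be processed concurrently.

```python
import bz2
from concurrent.futures import ThreadPoolExecutor

# Hypothetical chunk size for illustration; pbzip2's own default may differ.
CHUNK_SIZE = 900_000


def compress_in_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[bytes]:
    """Compress each chunk as an independent bzip2 stream, in parallel."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor() as pool:
        return list(pool.map(bz2.compress, chunks))


def decompress_in_parallel(streams: list[bytes]) -> bytes:
    """Decompress each independent stream on its own worker, reassembling in order."""
    with ThreadPoolExecutor() as pool:
        return b"".join(pool.map(bz2.decompress, streams))


if __name__ == "__main__":
    original = bytes(range(256)) * 20_000  # about 5 MB of sample data
    streams = compress_in_chunks(original)
    # The concatenated streams still form a valid multi-stream .bz2 file that a
    # sequential decoder (here bz2.decompress) reads end to end.
    assert bz2.decompress(b"".join(streams)) == original
    # Because the stream boundaries are known, decompression can be split too.
    assert decompress_in_parallel(streams) == original
    print(f"{len(streams)} independent streams")
```

A file compressed as one single stream offers no such boundaries to split on, which is consistent with the comment's point that the file has to be compressed with pbzip2 first.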

It seems you’ve brought me a solution, thanks!

Do you know if initramfs uses a single-threaded kernel bzip2 routine or my /bin/pbzip2 for decompression at runtime?

Regards.

Multicore compression: great! But… all decompression is done on a SINGLE core! Why?

I tried the option to select the number of threads, but in vain.

Any idea how to do multicore decompression?

Regards.

Do you know zpaqfranz?

Thanks.