Last Updated on March 19, 2024

Our Machine Learning in Linux series focuses on apps that make it easy to experiment with machine learning. All the apps covered in the series can be self-hosted.

Large Language Models trained on massive amounts of text can perform new tasks from textual instructions. They can generate creative text, solve maths problems, answer reading comprehension questions, and much more.

The recent release of Llama 2 caused consternation in the open source community, and with good reason. While Meta and Microsoft labeled Llama 2 as open source, it doesn't use an Open Source Initiative (OSI) approved license or comply with the Open Source Definition. We have nothing against proprietary software per se, but it's important to call out instances where multinationals label software as open source when it's not. With this in mind, we're still interested in looking at software that lets us access the Llama 2 models.

Ollama is software in an early stage of development that lets you run and chat with Llama 2 and other models. It’s cross-platform software running under Linux, macOS and Windows. Unlike Llama 2, Ollama actually is open source software, published under the MIT license.

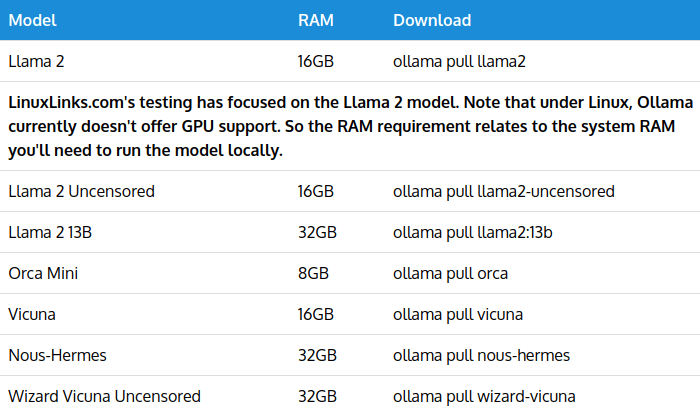

The table below shows the models that are supported by Ollama.

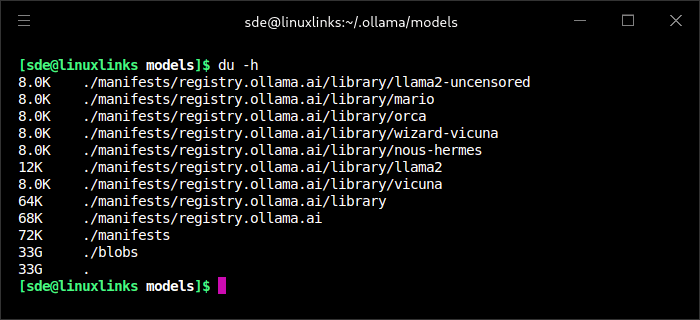

Each model package bundles weights, configuration and data into a single portable file. The models are stored in ~/.ollama/models. Downloading all the models uses a hefty chunk of disk space.
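Before pulling more models, it's worth checking how much space the existing ones already occupy. The Python sketch below simply totals the file sizes under a directory. It assumes the default ~/.ollama/models location mentioned above; the function names are our own, and a different path can be passed as a command-line argument if your installation keeps its models elsewhere. The standard `du -sh ~/.ollama/models` command gives a similar figure.

```python
import os
import sys
from pathlib import Path


def directory_size(root: Path) -> int:
    """Return the total size in bytes of all regular files under root.

    A missing directory yields 0, because os.walk ignores errors by default.
    """
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            # Skip symlinks so the same data is not counted twice.
            if not path.is_symlink() and path.is_file():
                total += path.stat().st_size
    return total


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units (B, KiB, MiB, GiB, TiB)."""
    size = float(num_bytes)
    units = ["B", "KiB", "MiB", "GiB", "TiB"]
    for unit in units[:-1]:
        if size < 1024:
            return f"{size:.1f} {unit}"
        size /= 1024
    return f"{size:.1f} {units[-1]}"


def main() -> None:
    # Assumption: models live in the default ~/.ollama/models directory
    # unless another path is given on the command line.
    if len(sys.argv) > 1:
        target = Path(sys.argv[1])
    else:
        target = Path.home() / ".ollama" / "models"
    if target.is_dir():
        print(f"{target}: {human_readable(directory_size(target))}")
    else:
        print(f"{target} not found; pass the model directory as an argument")


if __name__ == "__main__":
    main()
```

Run it without arguments to check the default location, or as `python3 model_usage.py /path/to/models` (the script name is hypothetical) for a custom one.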

On the next page, we'll take you through the installation steps under Linux. We haven't tested the software under macOS or Windows. Heh, we're a Linux site 🙂

Next page: Page 2 – Installation

Pages in this article:

Page 1 – Introduction

Page 2 – Installation

Page 3 – In Operation

Page 4 – Summary