Last Updated on March 19, 2024

Summary

Ollama offers a very simple self-hosted method of experimenting with the latest Llama model. You can access a variety of models with a few simple commands. You’ll be up and running in a few minutes.

Ollama runs slowly even on a high-spec machine, as there’s currently no GPU support under Linux.

There’s the option to customize models by creating your own Modelfile. Customization lets you set parameters such as temperature and define a system prompt to personalize how a model responds.
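As a rough illustration of the customization described above, here is a minimal sketch that writes a Modelfile setting a temperature and a system prompt. The base model name `llama2`, the temperature value, the prompt text, and the helper `render_modelfile` are all assumptions chosen for the example, not settings taken from this review.

```python
from pathlib import Path


def render_modelfile(base_model: str, temperature: float, system_prompt: str) -> str:
    """Return Modelfile text that derives a customized model from base_model."""
    lines = [
        # FROM names the model the customization builds on.
        f"FROM {base_model}",
        # Higher temperature gives more varied output; lower is more deterministic.
        f"PARAMETER temperature {temperature}",
        # Triple quotes let the system prompt span several lines if needed.
        f'SYSTEM """{system_prompt}"""',
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values, for illustration only.
modelfile_text = render_modelfile(
    base_model="llama2",
    temperature=0.7,
    system_prompt="You are a concise assistant for Linux questions.",
)

# Write the file to the current directory and show what was generated.
Path("Modelfile").write_text(modelfile_text, encoding="utf-8")
print(modelfile_text)
```

With the file in place, the customized model can be built with $ ollama create linux-helper -f Modelfile and started with $ ollama run linux-helper, where `linux-helper` is just a placeholder name.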

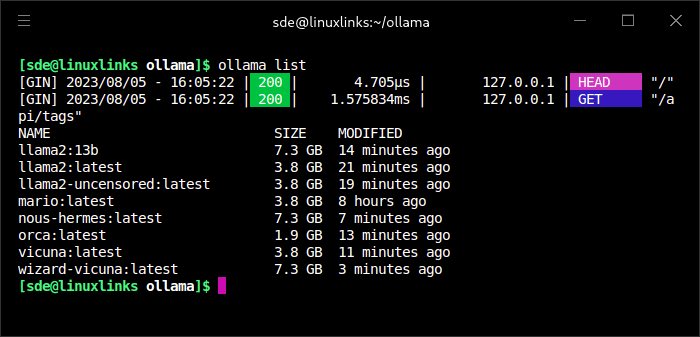

We’re going to experiment with all the supported models on a beefier machine than our test machine, which has a 12th gen processor. We’ve just downloaded the models on this machine. List the models with $ ollama list

At the time of writing, Ollama has amassed nearly 5k GitHub stars.

Website: github.com/ollama/ollama

Support:

Developer: Jeffrey Morgan

License: MIT License

For other useful open source apps that use machine learning/deep learning, we’ve compiled this roundup.

Ollama is written mainly in C and C++. Learn C with our recommended free books and free tutorials. Learn C++ with our recommended free books and free tutorials.
