In Operation

On the first run, we’re prompted to make a few choices:

- Select a vault directory. This can be an existing directory. Only Markdown files are indexed.

- Choose an embedding model. The default model is Xenova/UAE-Large-V1, but we can also choose Xenova/bge-base-en-v1.5 or Xenova/bge-small-en-v1.5.

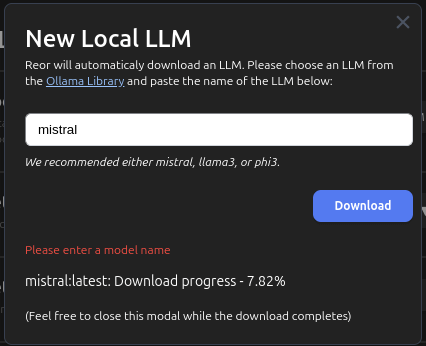

- Attach an LLM. This can be a local LLM, a cloud LLM, or a remote LLM. We went with a local LLM. The recommended models are mistral, llama3, and phi3.

These selections can be changed in the Settings section of the software.

After completing these steps, the vector database is initialised.
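The indexing step can be pictured with a minimal Python sketch. This is not Reor's code: the function names and the rule of packing paragraphs into chunks of at most 500 characters are hypothetical, chosen only to show how a vault of Markdown files might be turned into text chunks ready for embedding.

```python
from pathlib import Path


def find_markdown_files(vault: Path) -> list[Path]:
    """Return every Markdown (.md) file under the vault, recursively, sorted."""
    return sorted(p for p in vault.rglob("*.md") if p.is_file())


def chunk_text(text: str, max_chars: int = 500) -> list[str]:
    """Split on blank lines, then pack paragraphs into chunks of at most max_chars.

    A single paragraph longer than max_chars is kept whole as its own chunk.
    """
    chunks: list[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue  # skip empty paragraphs caused by extra blank lines
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


def build_index(vault: Path, max_chars: int = 500) -> list[tuple[Path, int, str]]:
    """Return (file, chunk_number, chunk_text) records, one per chunk."""
    records: list[tuple[Path, int, str]] = []
    for path in find_markdown_files(vault):
        text = path.read_text(encoding="utf-8")
        for i, chunk in enumerate(chunk_text(text, max_chars)):
            records.append((path, i, chunk))
    return records
```

In a real tool, each record would then be passed to the selected embedding model and the resulting vector stored in the vector database; that step is omitted here.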

What makes Reor interesting? It automatically links related ideas, answers questions about your notes, and offers fast semantic search. The software automatically links each note to related notes in the Related Notes sidebar, and you can view the notes related to a particular chunk of text by highlighting it and clicking the button that appears. Linking is a strong area: we can also create inline links by surrounding text with double square brackets (as in Obsidian).
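The two kinds of linking can be illustrated with a short Python sketch under stated assumptions: double-bracket links are found with a simple regular expression, and related notes are ranked by cosine similarity between embedding vectors. The vectors are hand-written toy values standing in for the output of an embedding model, and every name here is hypothetical; Reor's own ranking may work differently.

```python
import math
import re

# Matches [[Some Note]] and captures the text between the double brackets.
WIKI_LINK = re.compile(r"\[\[([^\[\]]+)\]\]")


def extract_wiki_links(markdown: str) -> list[str]:
    """Return the targets of [[...]] links in order of appearance."""
    return [m.group(1).strip() for m in WIKI_LINK.finditer(markdown)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def related_notes(query: list[float], index: dict[str, list[float]], k: int = 3) -> list[str]:
    """Rank indexed notes by cosine similarity to the query vector; return the top k names."""
    ranked = sorted(index, key=lambda name: cosine(query, index[name]), reverse=True)
    return ranked[:k]
```

For example, `extract_wiki_links("See [[Reor]] and [[Obsidian notes]].")` returns `["Reor", "Obsidian notes"]`, and with the toy index `{"linux": [1.0, 0.0], "podcast": [0.0, 1.0], "kernel": [0.9, 0.1]}` a query of `[1.0, 0.0]` with `k=2` returns `["linux", "kernel"]`. Highlighting a chunk of text would correspond to embedding that chunk and using it as the query.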

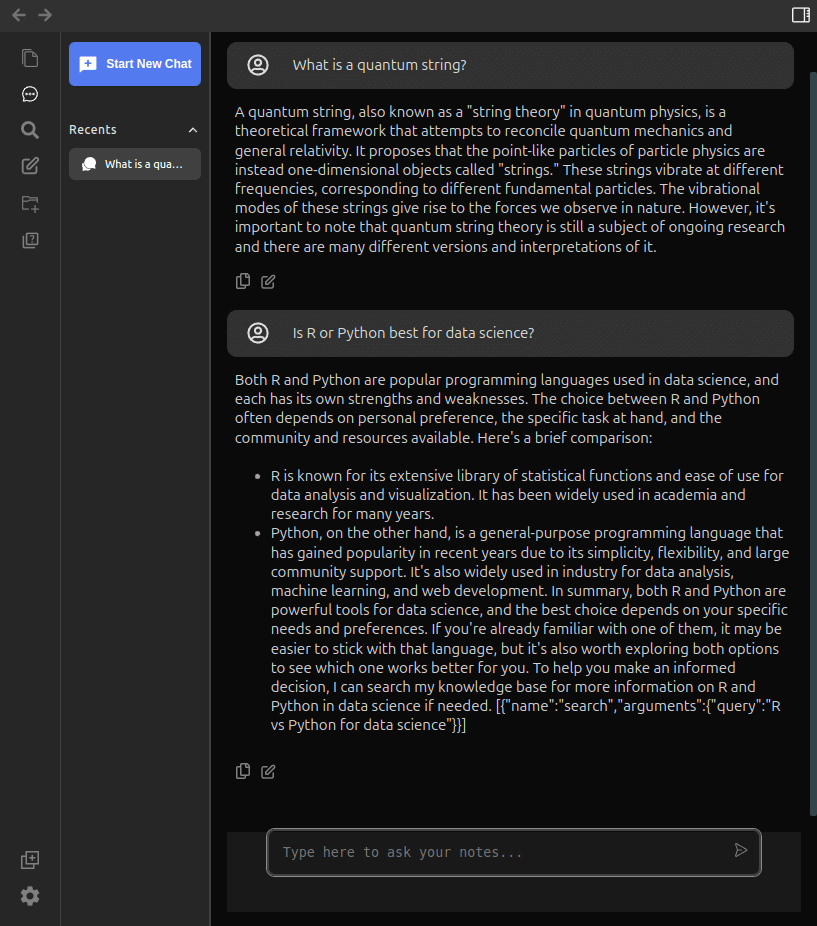

You can chat with a local model or connect to OpenAI models with an API key. Here’s an example chat session using the mistral model (which is being run locally).

As with all AI chatbots, you’ll need to exercise caution. For example, we entered the text “podcasts LinuxLinks.com”, and the bot claimed that we offer a podcast called “Linux Links Weekly”. That’s complete baloney.

The chat functionality goes beyond general-purpose chat. For example, you can chat with the current note, which means you can choose between a regular LLM chat and a Notes chat.
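Chatting with the current note amounts to giving the model the note as context. The sketch below is a hypothetical illustration, not Reor's actual prompt: it builds an OpenAI-style list of role/content messages, truncates the note to an assumed character budget, and instructs the model to answer only from the note. As the podcast example shows, such an instruction can reduce invented answers but cannot guarantee against them.

```python
def build_note_chat_messages(
    note_title: str,
    note_text: str,
    question: str,
    max_context_chars: int = 4000,  # assumed budget, not a Reor setting
) -> list[dict[str, str]]:
    """Build chat messages that ground the model in the current note."""
    context = note_text[:max_context_chars]  # crude truncation to fit the context window
    system = (
        "Answer using only the note below. "
        "If the note does not contain the answer, say so.\n\n"
        f"# {note_title}\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
```

A regular LLM chat would send only the user message; the Notes chat variant adds the system message carrying the note.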

The software also offers AI flashcards. You can generate flashcards from any note.
