Our Machine Learning in Linux series focuses on apps that make it easy to experiment with machine learning. All the apps covered in the series can be self-hosted.

Large Language Models (LLMs) trained on massive amounts of text can perform new tasks from textual instructions. They can generate creative text, solve maths problems, answer reading comprehension questions, and much more.

Serge is a chat interface crafted with llama.cpp for running GGUF models.

Installation

We evaluated Serge using Manjaro, an Arch-based distro, as well as the ubiquitous Ubuntu 24.10 distro.

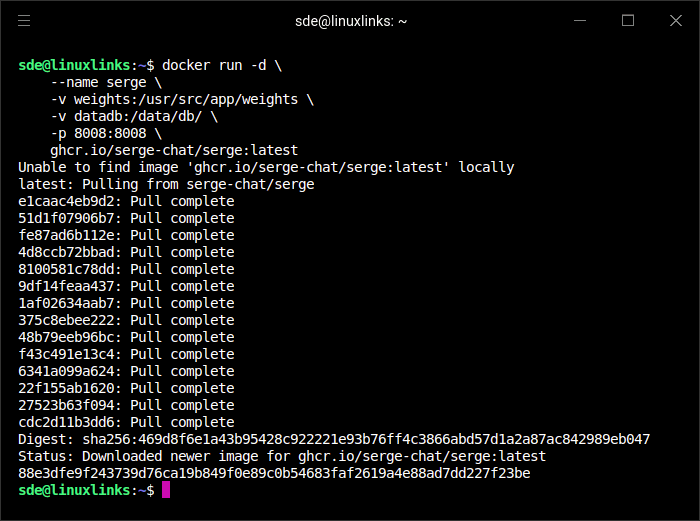

With both distros, installation is a breeze. We used Docker to install and run Serge. Issue the command:

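```shell
# -d runs the container in the background; --name lets you refer to it as "serge".
# The named volumes "weights" and "datadb" keep downloaded models and chat data
# across container restarts; -p publishes the web interface on port 8008.
docker run -d \
  --name serge \
  -v weights:/usr/src/app/weights \
  -v datadb:/data/db/ \
  -p 8008:8008 \
  ghcr.io/serge-chat/serge:latest
```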

This is what you’ll see.

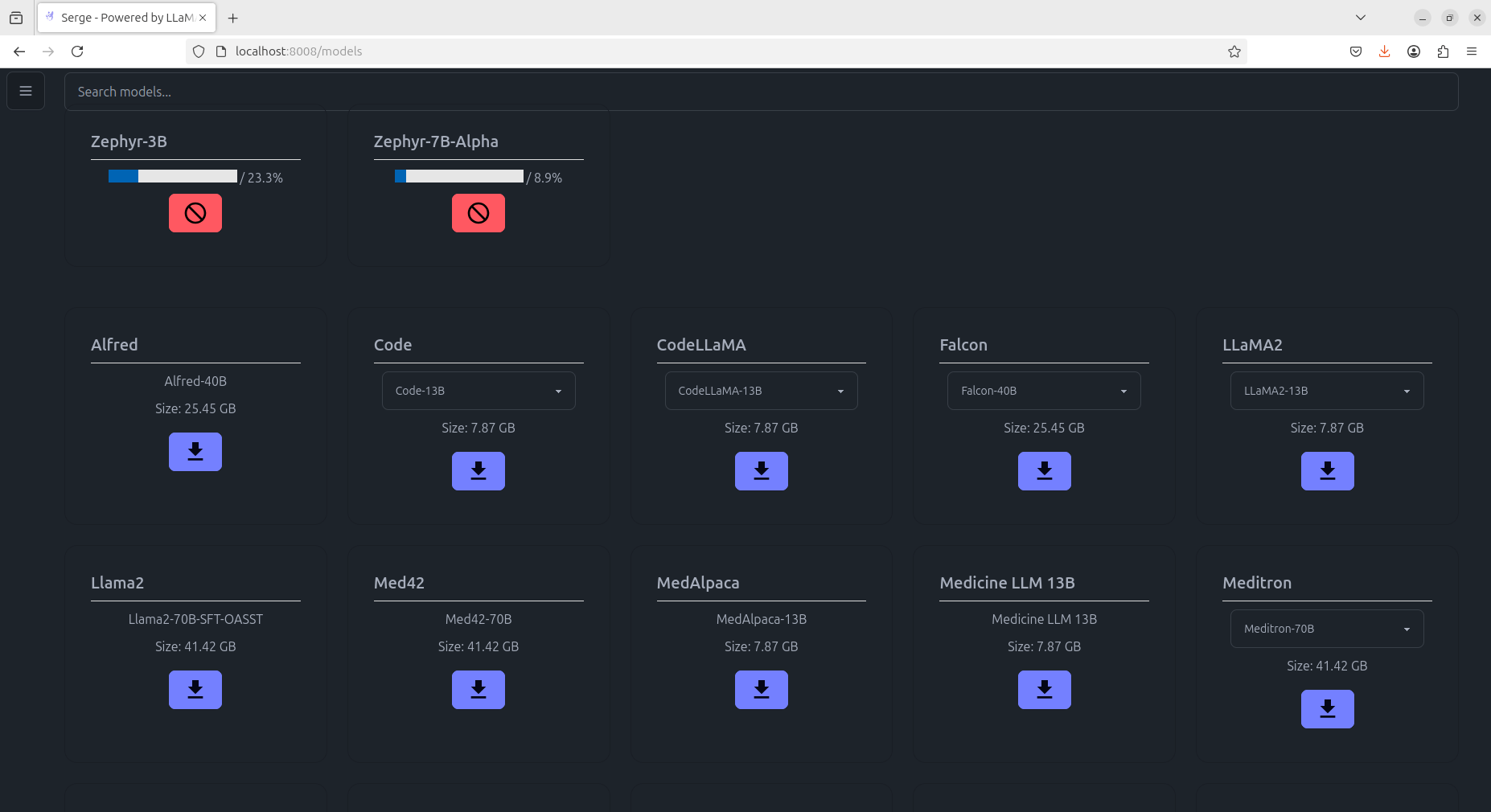
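Before opening the browser, you may want to confirm the container actually started: `docker ps --filter name=serge` lists it if it is running, and `docker logs serge` shows its output. The web interface can take a moment to come up, so the function below is a minimal sketch (our own helper, not part of Serge) that polls a URL with curl until it responds or gives up. The name `wait_for_http` and the 60-retry default are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Serge): poll a URL until it responds.
# Retries about once per second and gives up after TIMEOUT retries. Requires curl.
wait_for_http() {
  local url="${1:-http://localhost:8008}"  # port published by -p 8008:8008
  local timeout="${2:-60}"                 # assumed default; adjust as needed
  local waited=0
  # -s: silent, -f: treat HTTP errors as failures, --max-time: cap each attempt
  until curl -sf --max-time 5 -o /dev/null "$url" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "gave up waiting for $url after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$url is up"
}
```

For example, `wait_for_http http://localhost:8008 120` waits up to roughly two minutes and prints a confirmation once Serge answers.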

Next, point your web browser to http://localhost:8008, where you can download one or more models. There’s a whole raft of models available.

In the above image, we’re downloading a couple of models. Many of the models are large downloads, and some may not run if your system doesn’t have sufficient RAM. Because the models are stored locally, Serge runs entirely self-hosted once they are downloaded.
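How much RAM is enough depends on the model. As a rough rule of thumb (our assumption, not a figure published by Serge), a GGUF model needs at least its file size in free memory, plus some headroom for the context and the runtime. The sketch below checks that on Linux by reading `MemAvailable` from `/proc/meminfo`; the function names and the 20% headroom default are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Serge). Linux-only: reads /proc/meminfo.

# Print the kernel's estimate of available memory, in bytes.
mem_available_bytes() {
  local kb
  kb=$(awk '/^MemAvailable:/ { print $2 }' /proc/meminfo)  # value is in kB
  echo $(( kb * 1024 ))
}

# fits_in_ram SIZE_BYTES [HEADROOM_PERCENT]
# Succeeds if SIZE_BYTES plus HEADROOM_PERCENT (assumed default 20) fits in
# available memory. This is a heuristic, not a guarantee the model will run.
fits_in_ram() {
  local size="$1"
  local headroom="${2:-20}"
  local needed=$(( size + size * headroom / 100 ))
  local avail
  avail=$(mem_available_bytes)
  [ "$needed" -le "$avail" ]
}
```

For instance, `fits_in_ram "$(stat -c %s model.gguf)" && echo "should fit"`, where `model.gguf` stands in for a downloaded model file.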

The software is cross-platform. Besides Linux, it runs under macOS and Windows.

Pages in this article:

Page 1 – Introduction and Installation

Page 2 – In Operation and Summary