In Operation

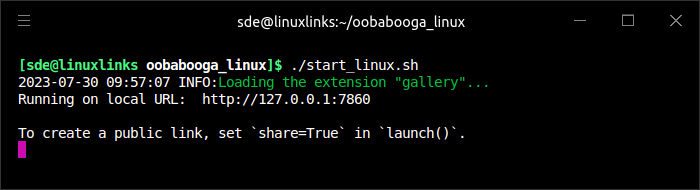

To start the software, run the start_linux.sh shell script.

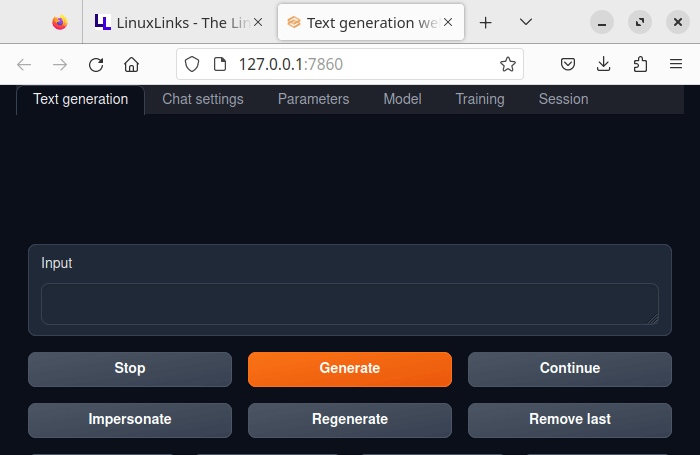

This is what we see in Firefox.

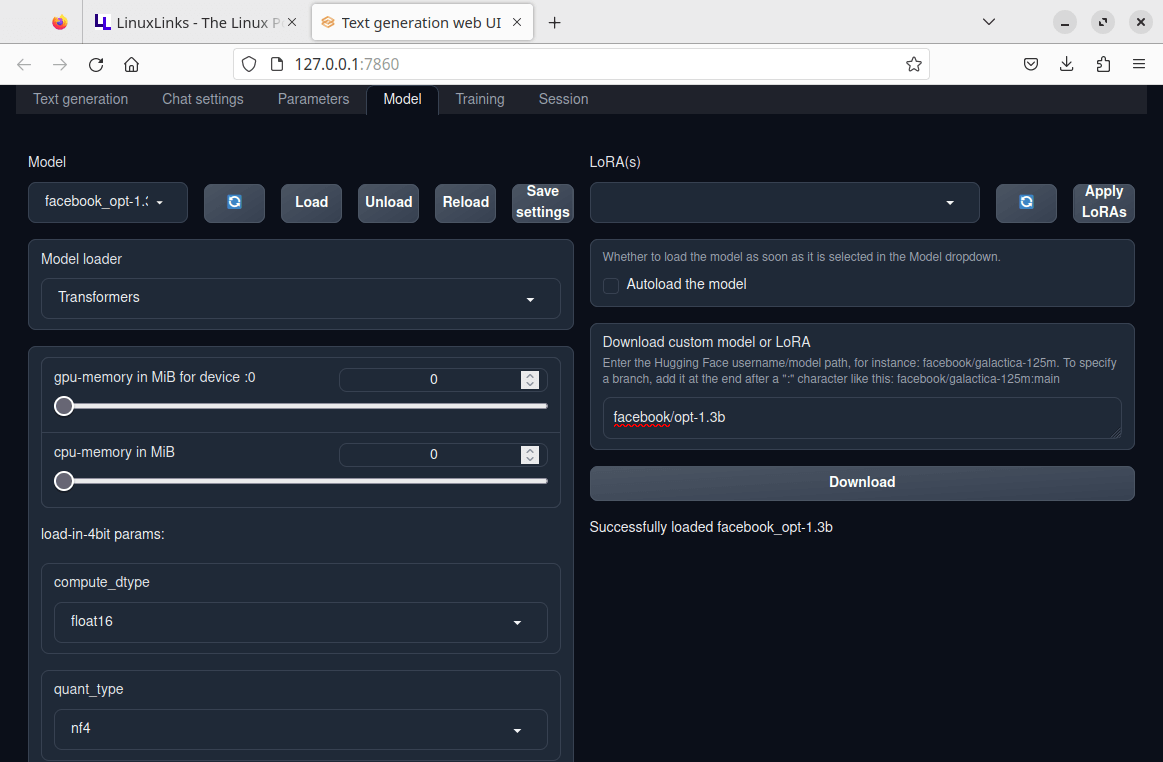

As explained in the Installation section, you’ll need to download a model. Click the Model tab at the top of the screen and, in the Download custom model or LoRA field, enter the model’s Hugging Face identifier. We’ll download facebook/opt-1.3b.

We’ve downloaded the facebook_opt-1.3b model and loaded it.
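Note that the identifier typed into the download field (facebook/opt-1.3b) and the name the model is loaded under (facebook_opt-1.3b) differ: the slash has become an underscore, presumably because a slash cannot appear in a single folder name. Here is a minimal sketch of that mapping. It assumes a simple character substitution is all that happens, and `local_model_name` is a hypothetical helper, not a function from the web UI.

```python
def local_model_name(hf_id: str) -> str:
    """Map a Hugging Face model identifier to the local name shown in the UI.

    Assumption: the only transformation is replacing '/' with '_'.
    """
    return hf_id.replace("/", "_")


if __name__ == "__main__":
    print(local_model_name("facebook/opt-1.3b"))  # facebook_opt-1.3b
```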

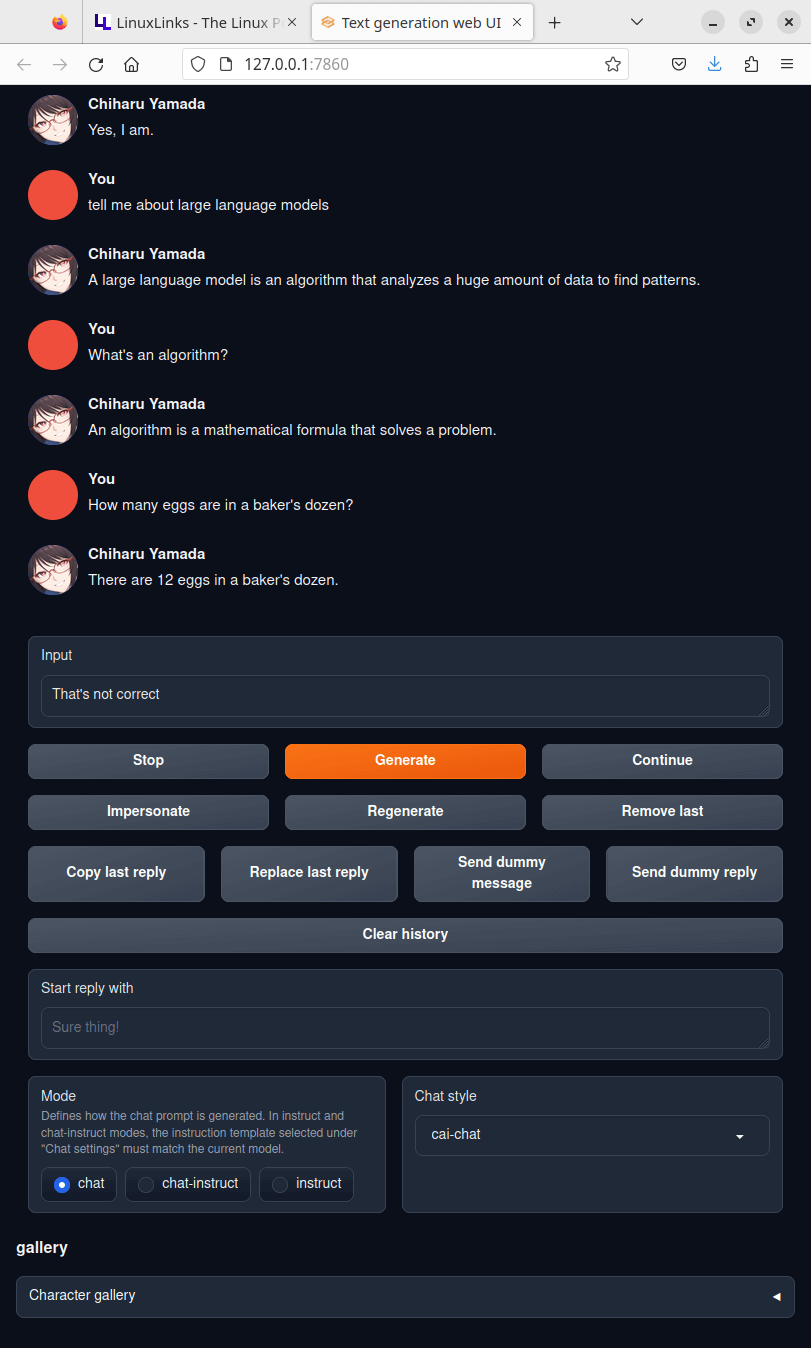

Here’s an example chat with this model.

There are, in fact, 13 eggs in a baker’s dozen. As with any LLM, the model’s answers should never be trusted blindly.

What features are available? An impressive array. Here are the highlights:

- 3 interface modes: default, notebook, and chat.

- Multiple model backends: transformers, llama.cpp, ExLlama, AutoGPTQ, GPTQ-for-LLaMa.

- Dropdown menu for quickly switching between different models.

- LoRA: load and unload LoRAs on the fly, and train new ones. Low-rank adaptation (LoRA) is one of the techniques that enormously reduces the cost of fine-tuning: instead of updating all of a model’s weights, it trains small low-rank matrices that are added to them, so you can fine-tune an LLM at a fraction of the usual cost.

- Precise instruction templates for chat mode, including Llama 2, Alpaca, Vicuna, WizardLM, StableLM, and many others.

- Multimodal pipelines, including LLaVA and MiniGPT-4.

- 8-bit and 4-bit inference through bitsandbytes.

- CPU mode for transformers models.

- DeepSpeed ZeRO-3 inference. ZeRO-Inference pins the entire set of model weights in CPU memory or NVMe storage (whichever can accommodate the full model) and streams the weights layer by layer into the GPU for inference computation.

- Extensions.

- Custom chat characters.

- Very efficient text streaming.

- Markdown output with LaTeX rendering, useful for instance with GALACTICA.
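The LoRA item above rests on a simple idea. A pretrained weight matrix W is kept frozen, and its update is learned as the product of two small matrices, B (d_out × r) and A (r × d_in), where the rank r is small. The sketch below uses plain Python with no ML libraries. It counts trainable parameters for a hypothetical 2048 × 2048 layer at rank 8 and shows the adapted forward pass y = Wx + (alpha/r)·BAx. The layer size, rank, and alpha are illustrative assumptions, not values used by the web UI. The alpha/r scaling and the zero initialisation of B follow the original LoRA formulation.

```python
import random


def lora_param_counts(d_out, d_in, rank):
    """Return (full fine-tuning params, LoRA params) for one d_out x d_in weight."""
    full = d_out * d_in           # every entry of W would be trained
    lora = rank * (d_out + d_in)  # B is d_out x rank, A is rank x d_in
    return full, lora


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


if __name__ == "__main__":
    full, lora = lora_param_counts(2048, 2048, rank=8)
    print(full, lora, f"{lora / full:.2%}")  # 4194304 32768 0.78%

    # Tiny example: with B initialised to zero, the adapted layer
    # starts out identical to the frozen one.
    random.seed(0)
    d, r = 4, 2
    W = [[random.gauss(0, 1) for _ in range(d)] for _ in range(d)]
    A = [[random.gauss(0, 0.01) for _ in range(d)] for _ in range(r)]
    B = [[0.0] * r for _ in range(d)]
    x = [1.0, 2.0, 3.0, 4.0]
    assert lora_forward(W, A, B, x, alpha=16, rank=r) == matvec(W, x)
```

Because B starts at zero, the adapted layer initially behaves exactly like the frozen one. Training only has to learn r·(d_out + d_in) numbers per layer instead of d_out·d_in.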
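The 8-bit and 4-bit inference item matters mostly for memory. The back-of-the-envelope sketch below estimates the space needed for the weights of a model with roughly 1.3 billion parameters, which is the size suggested by the facebook/opt-1.3b name. It deliberately ignores activations, the KV cache, and the per-block scaling data that quantization schemes store, so real usage will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate storage for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    params = 1.3e9  # assumption: ~1.3 billion parameters
    for label, bits in [("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>5}: {weight_memory_gb(params, bits):.2f} GB")
```

This prints about 2.60 GB for fp16, 1.30 GB for 8-bit, and 0.65 GB for 4-bit. Each halving of the bits per weight halves the footprint.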

Summary

Text generation web UI offers a trouble-free way to experiment with a wide range of LLMs. It’s easy-peasy to install.

Of the many models it supports, we are particularly fascinated by GALACTICA, a general-purpose scientific language model. It’s trained on a large corpus of scientific text and data and performs scientific NLP tasks at a high level.

At the time of writing, Text generation web UI has amassed nearly 20k GitHub stars.

Website: github.com/oobabooga/text-generation-webui

Support:

Developer: oobabooga

License: GNU Affero General Public License v3.0

For other useful open source apps that use machine learning/deep learning, we’ve compiled this roundup.

Text generation web UI is written in Python. Learn Python with our recommended free books and free tutorials.

This software lives up to its hype!